The following is a guest blog post by Robert Kelchen, a graduate student in Educational Policy Studies at UW-Madison and a frequent co-author of mine. --Sara

I was pleased to see the release of Education Sector’s report, “Debt to Degree: A New Way of Measuring College Success,” by Kevin Carey and Erin Dillon. They created a new measure, a “borrowing to credential ratio,” which divides the total amount of borrowing by the number of degrees or credentials awarded. Their focus on institutional productivity and dedication to methodological transparency (their data are made easily accessible on the Education Sector’s website) are certainly commendable.
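As a rough illustration of the arithmetic, the ratio can be sketched as a single division. This is a simplified sketch, not the report's exact method: it assumes one year of total borrowing and one count of credentials, and the function name and dollar figures are hypothetical.

```python
def borrowing_to_credential_ratio(total_borrowing: float, credentials_awarded: int) -> float:
    """Simplified sketch: total dollars borrowed divided by degrees/credentials awarded.

    Hypothetical helper; the report's exact inputs (loan types, years, how
    credentials are counted) are not reproduced here.
    """
    if credentials_awarded <= 0:
        raise ValueError("credentials_awarded must be positive")
    return total_borrowing / credentials_awarded


# Hypothetical college: students borrowed $12 million and the college awarded 600 credentials.
ratio = borrowing_to_credential_ratio(12_000_000, 600)
print(f"${ratio:,.0f} borrowed per credential")
```

Because the ratio has two moving parts, a college can lower it either by reducing borrowing (the numerator) or by awarding more credentials (the denominator).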

That said, I have several concerns with their report. I will focus on two key points, both of which pertain to how this approach would affect the measurement of performance for 2-year and 4-year not-for-profit (public and private) colleges and universities. My comments are based on an analysis in which I merged IPEDS data with the Education Sector data to analyze additional measures; my final sample consists of 2,654 institutions.

Point 1: Use of the suggested "borrowing to credential" ratio has the potential to reduce college access for low-income students.

The authors rightly mention that flagship public and elite private institutions appear successful on this metric because they have a lower percentage of financially needy students and more institutional resources (thus reducing the incidence of borrowing). The high-performing institutions also enroll students who are easier to graduate (e.g., those with higher entering test scores and better academic preparation), which increases the denominator in the borrowing to credential ratio.

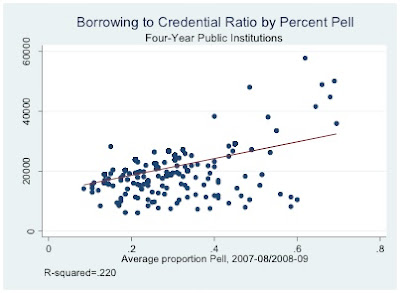

Specifically, the correlations between the percentage of Pell Grant recipients (average of the 2007-08 and 2008-09 academic years, from IPEDS) and the borrowing to credential ratio are 0.455 for public 4-year and 0.479 for private 4-year institutions, compared to 0.158 for 2-year institutions. This means that the more Pell recipients an institution enrolls, the worse it tends to perform on this ratio, especially among 4-year institutions.
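For readers who want to see what such a correlation measures, here is a minimal sketch assuming these are ordinary Pearson correlations (the post does not say which coefficient was used). The institutions and numbers below are made up for illustration and are not from IPEDS or Education Sector.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of the same length (n >= 2)")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance numerator and the two variance numerators (the 1/n factors cancel).
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


# Hypothetical institutions: percent Pell recipients and borrowing per credential ($).
pct_pell = [15, 25, 35, 45, 60]
ratio = [14_000, 19_000, 18_000, 26_000, 30_000]
print(round(pearson_r(pct_pell, ratio), 3))
```

A positive coefficient describes a tendency across institutions, not a rule for every college: in the made-up data above, the 35% Pell college has a lower ratio than the 25% Pell college.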

Even though Carey and Dillon focus on comparing similar institutions in their report (for example, Iowa State and Florida State), it is very likely that in the real world (i.e., the policy world) the data will be used to compare dissimilar institutions. The expected unintended consequence is “cream skimming,” in which institutions have incentives to enroll either high-income students or low-income students with a very high likelihood of graduating. (Sara and I have previously raised concerns about “cream skimming” with Pell Grant recipients in other work.)

The graphs below further illustrate the relationship between the percentage of Pell recipients and the borrowing to credential ratio for each of the three sectors.

There is also a negative relationship between a university’s endowment (per full-time-equivalent student) and its average borrowing to credential ratio. Among public 4-year universities, the correlation between per-student endowment and the borrowing to credential ratio is -.134, suggesting that institutions with larger endowments tend to have lower borrowing to credential ratios. The relationship at private four-year universities is stronger, with a correlation of -.346. For example, Princeton, Cooper Union, Caltech, Pomona, and Harvard are all in the top 15 for lowest borrowing to credential ratios.

The relationship between borrowing to credential ratios and standardized test scores is even stronger. The correlations for four-year public and private universities are -.488 and -.589, respectively. This suggests that low borrowing to credential ratios are in part a function of student inputs, not just factors within an institution’s control. In other words, the metric does not solely measure college performance.

It is critical to note that the average borrowing to credential ratio should be lower at institutions that have more financial resources and that enroll more students who can afford to attend college without borrowing. However, institutions that enroll a large percentage of Pell recipients should not be let off the hook for their borrowing to credential ratios. These two examples highlight the importance of input-adjusted comparisons, in which statistical adjustments are used so that institutions can be compared based more on their value added than on their initial level of resources. The authors should take care to ensure that their work is used in input-adjusted comparisons rather than unadjusted comparisons. Otherwise, institutions with fewer resources will be much more likely to be penalized even if they are successfully graduating students with relatively low levels of debt.
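One common way to make an input-adjusted comparison is to regress the ratio on an input measure and compare institutions by their residuals (actual ratio minus predicted ratio). The post does not specify an adjustment method, so this is only a sketch under that assumption: it uses a single input (percent Pell) where a real analysis would likely include several (e.g., endowment per FTE and test scores), and the colleges and figures are hypothetical.

```python
def fit_line(xs, ys):
    """Ordinary least squares fit of ys = a + b * xs; returns (a, b)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx
    a = mean_y - b * mean_x
    return a, b


def adjusted_scores(xs, ys):
    """Residuals: actual ratio minus the ratio predicted from the input.

    Negative values mean students borrow less per credential than expected
    given the institution's share of Pell recipients.
    """
    a, b = fit_line(xs, ys)
    return [y - (a + b * x) for x, y in zip(xs, ys)]


# Hypothetical colleges: percent Pell recipients and borrowing per credential ($).
names = ["College A", "College B", "College C", "College D"]
pct_pell = [20, 30, 50, 60]
ratio = [15_000, 22_000, 21_000, 32_000]

for name, resid in zip(names, adjusted_scores(pct_pell, ratio)):
    print(f"{name}: {resid:+,.0f}")
```

In this made-up data, College A has the lowest raw ratio, but College C, which enrolls a much larger share of Pell recipients, looks best once that input is taken into account (a residual of -4,800 versus -900 for College A).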

Point 2: The IPEDS classification of two-year versus four-year institutions does not necessarily reflect a college’s primary mission.

IPEDS classifies a college as a 4-year institution if it offers at least one bachelor’s degree program, even if the vast majority of students are enrolled in 2-year programs. Think of Miami Dade College, where more than 97% of students are in 2-year programs but the institution is classified as a 4-year institution.

For the purposes of calculating a borrowing to credential ratio, the Carnegie basic classification system is more appropriate. Under that system, an institution is classified as an associate’s college if bachelor’s degrees make up less than ten percent of all undergraduate credentials. The Education Sector report classifies as four-year colleges 60 institutions that are Carnegie associate’s institutions.
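The difference between the two classification rules can be sketched as follows. Both functions are hypothetical simplifications of the rules as described in this post (the official Carnegie methodology may include further criteria), and the example college is made up.

```python
def ipeds_is_four_year(offers_any_bachelors: bool) -> bool:
    """Simplified IPEDS rule as described above: one bachelor's program is enough."""
    return offers_any_bachelors


def is_carnegie_associates(bachelors_awarded: int, total_undergrad_credentials: int) -> bool:
    """Simplified Carnegie rule as described above: associate's college if
    bachelor's degrees are less than 10% of all undergraduate credentials."""
    if total_undergrad_credentials <= 0:
        raise ValueError("total_undergrad_credentials must be positive")
    return bachelors_awarded / total_undergrad_credentials < 0.10


# Hypothetical college: 150 bachelor's degrees out of 5,000 undergraduate credentials (3%).
print(ipeds_is_four_year(True))            # classified as four-year under the IPEDS rule
print(is_carnegie_associates(150, 5_000))  # but an associate's college under the Carnegie rule
```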

This classification decision has important ramifications for the borrowing to credential comparisons. The average borrowing to credential ratio by sector is as follows:

Two-year colleges, Carnegie associate’s: $6,579 (n=942)

Four-year colleges, Carnegie associate’s: $13,563 (n=60)

Four-year colleges, Carnegie bachelor’s or above: $23,166 (n=1,421)

Ten of the top 12 and 20 of the top 40 four-year colleges with the lowest borrowing to credential ratios are classified as Carnegie associate’s institutions. For example, Madison Area Technical College is 54th on the Education Sector’s list of four-year colleges, but 564th of 1,002 associate’s-granting institutions. These predominantly two-year institutions with a small number of bachelor’s degree offerings should either be placed with the other two-year institutions or in a separate category. Otherwise, anyone who wishes to rank institutions based on their classification would be comparing apples to oranges.

In conclusion: the effort in this report to measure institutional performance is a laudable one. But the development and use of metrics is challenging precisely because of their potential for misuse and unintended consequences. Refining the proposed metrics as described above may make them more useful.

It Rhymes With 'Tool'

UPDATED, 8/11/2011, 1:10 pm

Thursday morning in Washington DC -- the only city that could host such a vacuous, inane event -- the Thomas B. Fordham Institute is hosting (the hopefully one-off) "Education Reform Idol." The event has nothing to do with recognizing states that get the best results for children or those that have achieved demonstrated results from education policies over time -- but simply those that have passed pet reforms over the past year.

It purports to determine which state is the "reformiest" (I kid you not) with the only contenders being Florida, Illinois, Indiana, Ohio and Wisconsin and the only judges being: (1) a representative of the pro-privatization Walton (WalMart) Family Foundation; (2) the Walton-funded, public education hater Jeanne Allen; and (3) the "Fox News honorary Juan Williams chair" provided to the out-voted Richard Lee Colvin from Education Sector.

With the deck stacked like that, Illinois is out of the running immediately because its reforms were passed in partnership with teachers' unions. Plus it has a Democratic governor. Tssk, tssk. That's too bad, because Illinois represents the most balanced approach to education and teaching policy of the five states over the past year. And the absence of a state like Massachusetts from the running is insane. It has the best NAEP scores of any state and has a long track record of education results from raising standards and expectations, not by attacking teachers or privatizing our schools. But that's not the point here, of course. This is ALL politics. [UPDATE 8/11/2011: Yes, all politics. Mike Petrilli of Fordham says that "the lesson of Education Reform Idol" is --- ba-ba-ba-baaah ... ELECT REPUBLICANS. "When Republicans take power, reforms take flight."]

But I digress.... The coup de grace of ridiculousness for me is the inclusion of Wisconsin among the list of "contenders." What exactly have Scott Walker and his league of zombies actually accomplished for education over the last seven-and-a-half months OTHER THAN eliminating collective bargaining rights, a historic slashing of state school aids, and a purely political expansion of the inefficacious school voucher program?

What's even worse than the inclusion of Wisconsin among the nominees is the case made by Scott Walker's office for the 'reformiest' award. As a policy advisor to the former Wisconsin governor, I am amazed by the brazenness and spin from Walker's office. I would expect nothing less from a political campaign. But someone's gotta tell these folks that while they theoretically represent the public trust, the content of their arguments suggests we can't trust them as far as we can throw them. And here in cheese curd land, that ain't very far.

A quick look at Walker's argument reveals an upfront invocation of Tommy Thompson (Wisconsin's version of Ronald Reagan) to pluck at Badgers' heart strings and make them long for the good old days of the 1990s (when the rich paid their fair share in taxes). It is soon followed by the repeated and refuted claims that Walker's deep education cuts "protect students in the short term" and give districts "tools" to manage the fiscal slaughter. Just read the well-respected Milwaukee school superintendent's opinion of such "tools." Then there's this gem: "Districts immediately began to set aside more time for teacher collaboration as well as money for merit pay." I'd LOVE to see the data behind this claim because, for starters, as far as I am aware there is no state survey that measures collaborative time for teachers. Walker's staff probably lifted it from a single school district's claims detailed in this Milwaukee Journal-Sentinel story -- claims trumpeted by dozens upon dozens of right-wing bloggers such as Wisconsin's own Ann Althouse -- claims that have since been exposed as "literally unbelievable".

The irony is that this event is taking place in DC just two days after the recall elections of six seemingly vulnerable, incumbent Republican state senators. The repudiation of Walker's slash-and-burn policies will be testament enough to the destructiveness of his leadership both for public education and for the Badger State as a whole. In Wisconsin, recall would appear to be a far more effective 'tool' than the tools tentatively running the show under the Golden Dome in Madison.

[UPDATE 8/11/2011: For anyone who cares ... Indiana apparently is the "reformiest" state. By reformers' preferred metrics, I believe this means that Indiana will have the top NAEP scores in the nation next time 'round. Right?]

Image courtesy of Democurmudgeon
